Shuo Cai (蔡硕) is a Master of Philosophy student in the Department of Computing at The Hong Kong Polytechnic University (PolyU), supervised by Prof. Hongxia Yang. His research focuses on efficient post-training of Large Language Models (LLMs). He is currently working on enhancing LLM reasoning capabilities in coding and mathematical tasks, with an emphasis on alignment, low-resource settings, and scalable adaptation.

📝 Publications

InfiAlign: A Scalable and Sample-Efficient Framework for Aligning LLMs to Enhance Reasoning Capabilities

Shuo Cai, Su Lu, Qi Zhou, Kejing Yang, Zhijie Sang, Congkai Xie, Hongxia Yang

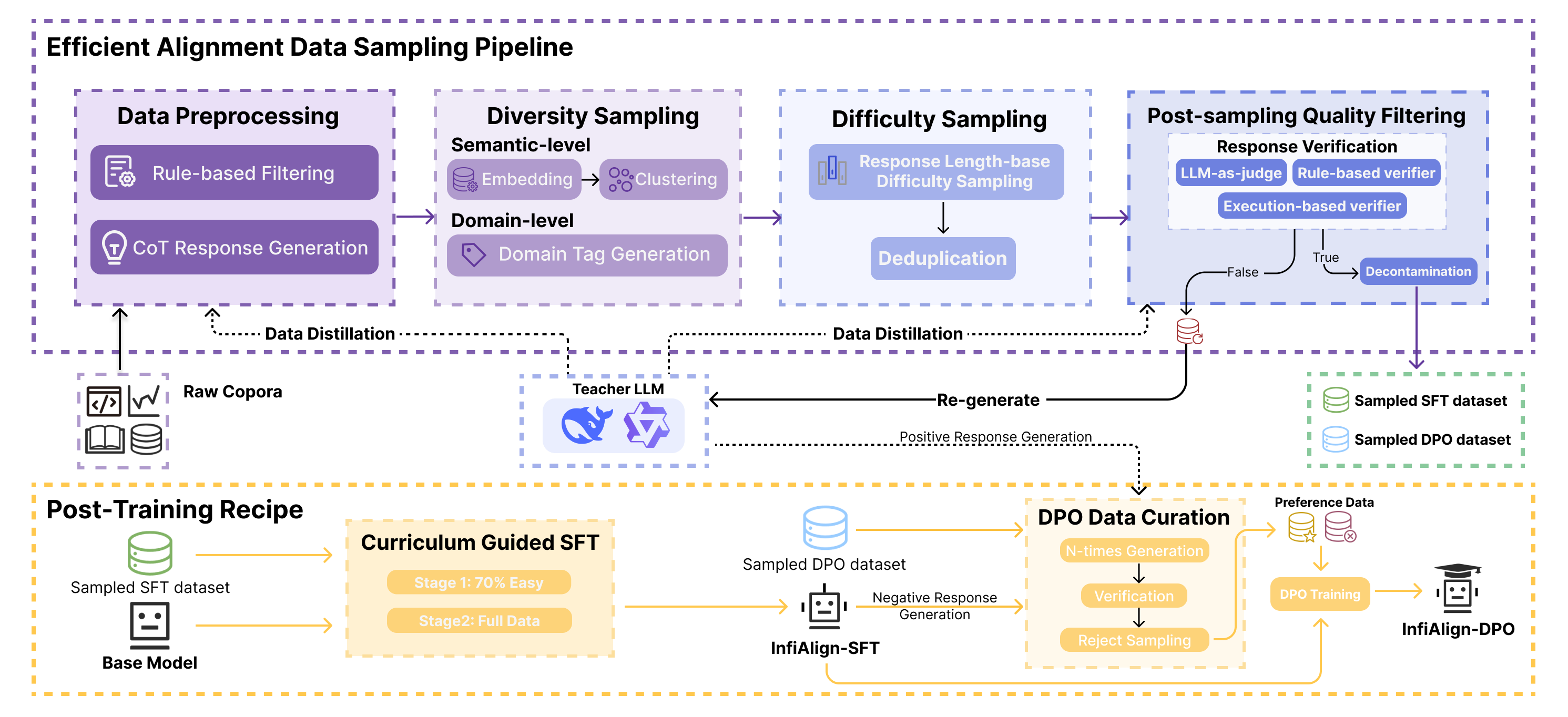

- This paper introduces InfiAlign, a scalable and sample-efficient post-training framework that enhances the reasoning capabilities of large language models while sharply reducing data and computational costs. Combining supervised fine-tuning (SFT) with Direct Preference Optimization (DPO), InfiAlign employs an automated data selection pipeline that curates high-quality alignment data from diverse open-source reasoning corpora using multi-dimensional metrics for diversity, difficulty, and quality. Applied to the Qwen2.5-Math-7B-Base model, it achieves performance on par with or surpassing leading distilled baselines such as R1-Distill-Qwen-7B while using only 12% of their training data, showing that principled alignment strategies can deliver state-of-the-art results without prohibitive resource demands.

InfiR : Crafting Effective Small Language Models and Multimodal Small Language Models in Reasoning

Congkai Xie, Shuo Cai, Wenjun Wang, Pengxiang Li, Zhijie Sang, Kejing Yang, Yiming Zhang, Zhen Li, Guanghao Zhu, Zeyu Liu, Yang Yu, Yuhang Liu, Su Lu, Baoyi He, Qi Zhou, Xiaotian Han, Jianbo Yuan, Shengyu Zhang, Fei Wu, Hongxia Yang

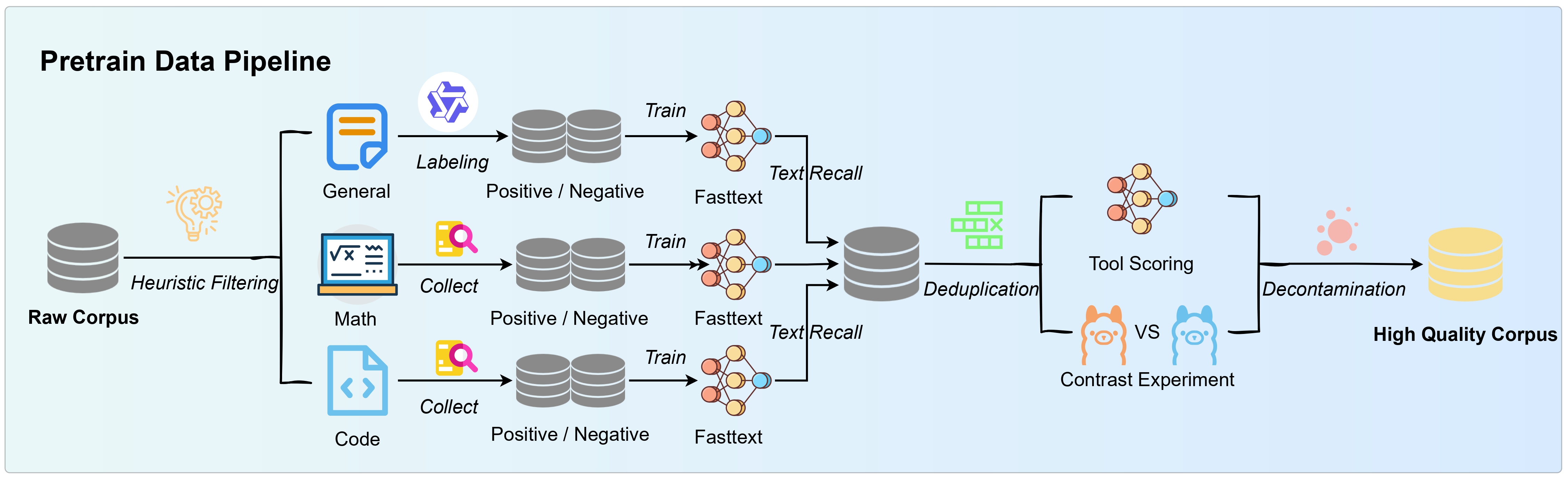

- This paper explores the development of efficient Small Language Models (SLMs) and Multimodal Small Language Models (MSLMs) that maintain strong reasoning abilities. It introduces a training pipeline designed to enhance reasoning skills while enabling easy deployment on edge devices. The proposed approach achieves state-of-the-art (SoTA) performance while keeping development costs low. InfiR aims to improve AI systems by strengthening reasoning capabilities, lowering adoption barriers, and addressing privacy concerns through compact model sizes.

📖 Education

- 2025.09 - present, Master of Philosophy, Department of Computing, The Hong Kong Polytechnic University.

- 2021.09 - 2025.06, Undergraduate, Intelligent Science and Technology, School of Automation Science and Engineering, South China University of Technology.

💻 Internships

- 2025.06 - 2025.09, Research Intern, Infix.ai, Shenzhen.